A Metal Renderer in Swift Playgrounds (Part 3)

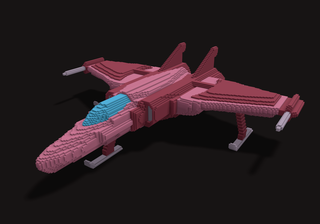

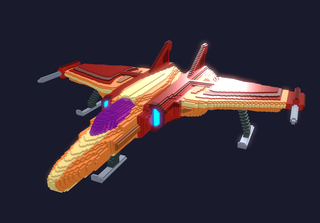

Voxel Spaceships

The next feature I’ve decided to add is model loading. Apple have out-of-the-box code capable of loading Wavefront OBJ files, which is the format I plan to use for my first forays into this area. In 2021 I experimented with 3D modelling on the iPad and used Voxel Max to create a few things with voxels. One of them was a spaceship, which I have exported from Voxel Max, and this will be the first model I attempt to load. I have called the model “Vox Siderum”, which means voice of the stars, because it sounds cool, is a spaceship, vox is part of voxel, it’s Latin, and I’m a massive nerd.

Vox Siderum started out as an experiment in drawing anything at all in Voxel Max. I quite liked the shape of it, so I doubled the resolution and carved out higher resolution detail. The mirror mode was a fantastic timesaver for this! Once I had the higher resolution version of the ship I recoloured it, taking advantage of the PBR material system provided by Voxel Max to add reflective and emissive surfaces. As stated, I made the model some time before the renderer, and it’s a happy coincidence that I now want to implement rendering features used by a model I had already created.

Loading Wavefront OBJ Models

I’ve had a few late nights getting model loading working. Unfortunately the Apple library code that can load models only exposes the data as a buffer of bytes, and accessing the structured data is not possible. The intention is that you pass a model directly to Metal, but in my case I need to calculate the face normals because the OBJ file exported by Voxel Max doesn’t include them. I could do this offline and may choose to do so at a later stage. However, since I know a little of the OBJ file format, I thought it would be a good exercise to load and parse the data myself, exercising some vanilla Swift and also taking a look at the iOS file loading API.
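As a rough illustration of the file loading step, here is a minimal sketch that reads a text file into an array of lines using Foundation. The function name `loadLines(from:)` and the resource name in the usage comment are hypothetical and not taken from my renderer; only `String(contentsOf:encoding:)` and `Bundle.main.url(forResource:withExtension:)` are real Foundation calls.

```swift
import Foundation

/// Hypothetical helper: reads a UTF-8 text file and returns its non-empty lines.
func loadLines(from url: URL) throws -> [String] {
    let text = try String(contentsOf: url, encoding: .utf8)
    // Splitting on newlines also drops empty lines by default.
    return text.split(whereSeparator: \.isNewline).map { String($0) }
}

// Usage, assuming a resource called "VoxSiderum.obj" exists in the bundle
// (the name is made up for this example):
// if let url = Bundle.main.url(forResource: "VoxSiderum", withExtension: "obj") {
//     let lines = try loadLines(from: url)
// }
```

Taking a `URL` rather than a resource name keeps the helper independent of where the file lives, which makes it easy to try with a temporary file.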

For the model loading I read each line as a string and stored it in a map keyed by its type. The Voxel Max OBJ export contained only faces, vertex positions, and texture coordinates. I then iterated through the faces, read the vertex position and texture coordinates corresponding to the three vertices of each face, and constructed a representation of a face from this. I used regular expressions to extract the data from each line. There doesn’t appear to be a native way of doing regex in Swift; instead some NS* classes provide the functionality. The API is a bit cumbersome, so I wrapped the matching functionality in a simpler interface.
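The approach described above can be sketched roughly as follows. This is not the code from my renderer: the names `groupLinesByType` and `captures(of:in:)` are hypothetical, and the face pattern assumes each face line has the form `f v/vt v/vt v/vt`, which matches an export containing only positions and texture coordinates. The wrapper is built on `NSRegularExpression`.

```swift
import Foundation

/// Hypothetical helper: groups OBJ lines by their leading keyword ("v", "vt", "f", ...).
func groupLinesByType(_ text: String) -> [String: [String]] {
    var groups: [String: [String]] = [:]
    for line in text.split(whereSeparator: \.isNewline) {
        guard let keyword = line.split(separator: " ").first else { continue }
        groups[String(keyword), default: []].append(String(line))
    }
    return groups
}

/// Hypothetical wrapper around NSRegularExpression: returns the capture
/// groups of the first match, or nil if the pattern does not match.
func captures(of pattern: String, in line: String) -> [String]? {
    guard let regex = try? NSRegularExpression(pattern: pattern) else { return nil }
    let range = NSRange(line.startIndex..., in: line)
    guard let match = regex.firstMatch(in: line, options: [], range: range) else { return nil }
    return (1..<match.numberOfRanges).compactMap { index in
        Range(match.range(at: index), in: line).map { String(line[$0]) }
    }
}

// Assumed face layout: three "position/texcoord" index pairs.
let facePattern = #"^f\s+(\d+)/(\d+)\s+(\d+)/(\d+)\s+(\d+)/(\d+)\s*$"#

let sample = "v 0 0 0\nv 1 0 0\nv 0 1 0\nvt 0 0\nvt 1 0\nvt 0 1\nf 1/1 2/2 3/3"
let groups = groupLinesByType(sample)
// OBJ indices are 1-based, so subtract 1 before indexing into the arrays.
let faceIndices = captures(of: facePattern, in: groups["f"]![0])!.compactMap { Int($0) }
```

Keeping the regex plumbing inside one small function means the parsing code only ever deals with arrays of strings.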

With my internal representation of a face I also calculated the face normal, and added this code to swift-math. Once I had the model loaded I created a pipeline based on the single colour shader I used for the icosahedron. I was able to load in the model of the spaceship from Voxel Max and draw it to the screen with simple lighting applied. There were a few glitches in the model - particularly around the skids, where parts of it were inverted or in the wrong place. After double checking my code I opened the model file up in vim and scanned through it. Fairly quickly I saw that a few coordinates were written in exponent notation, and my regex was matching only part of the number. Coordinates very close to 0 were the ones most commonly written this way, which is why the skids, at the very bottom of the model, were the most affected. I fixed this and then moved on to texture mapping.
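Two pieces of this paragraph lend themselves to a short sketch: a number pattern that accepts exponent notation, and the face normal calculation. Neither is the actual code from swift-math. The names `numberPattern`, `parseNumbers(in:)`, `cross`, and `faceNormal` are made up for illustration, and the normal assumes the vertices are given in counter-clockwise order.

```swift
import Foundation

// Matches plain decimals as well as exponent notation such as -2.25e-05.
// Without the optional exponent group, "-2.25e-05" would be read as -2.25.
let numberPattern = #"[-+]?(?:\d+\.?\d*|\.\d+)(?:[eE][-+]?\d+)?"#

/// Hypothetical helper: extracts every number found on a line.
func parseNumbers(in line: String) -> [Double] {
    guard let regex = try? NSRegularExpression(pattern: numberPattern) else { return [] }
    let range = NSRange(line.startIndex..., in: line)
    return regex.matches(in: line, options: [], range: range).compactMap { match in
        Range(match.range, in: line).flatMap { Double(String(line[$0])) }
    }
}

/// Cross product of two 3D vectors.
func cross(_ u: SIMD3<Float>, _ v: SIMD3<Float>) -> SIMD3<Float> {
    SIMD3<Float>(u.y * v.z - u.z * v.y,
                 u.z * v.x - u.x * v.z,
                 u.x * v.y - u.y * v.x)
}

/// Unit normal of the triangle (a, b, c), assuming counter-clockwise winding.
/// A degenerate triangle returns the zero vector unchanged.
func faceNormal(_ a: SIMD3<Float>, _ b: SIMD3<Float>, _ c: SIMD3<Float>) -> SIMD3<Float> {
    let n = cross(b - a, c - a)
    let length = (n * n).sum().squareRoot()
    return length > 0 ? n / length : n
}

let position = parseNumbers(in: "v 1.5 -2.25e-05 3")
let normal = faceNormal(SIMD3<Float>(0, 0, 0), SIMD3<Float>(1, 0, 0), SIMD3<Float>(0, 1, 0))
```

Since every face in a voxel export is flat, one normal per face can be shared by all three of its vertices.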

Loading the texture was trivial as I could reuse the code already written, and I was able to verify it worked by applying the texture to a cube face and comparing it to the actual image. Applying it to the model did not go so smoothly, however, and the texture did not align with the model. I spent some time checking whether I was loading and passing the texture coordinates correctly, but this couldn’t have been too far wrong: even though the texture was misplaced, it still showed as pixelated along the plane of the model. Given this I figured it must be either inverted or rotated. The answer was that the y texture coordinate was the inverse of how my texture was set up. Inverting it at load time resolved the issue.
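The fix amounts to a one-line change at load time. A minimal sketch, with `flipV` as a hypothetical name and assuming the coordinates are normalised to the range 0 to 1:

```swift
/// Hypothetical helper: inverts the vertical texture coordinate so that a
/// coordinate measured from the bottom edge is measured from the top instead.
func flipV(_ uv: SIMD2<Float>) -> SIMD2<Float> {
    SIMD2<Float>(uv.x, 1 - uv.y)
}

let flipped = flipV(SIMD2<Float>(0.25, 0.75))
```

Doing this once while loading keeps the shader unchanged, so the cubes and the spaceship can share the same texture sampling code.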

I ended up with a correctly loaded OBJ model, with lighting and texture mapping. As with the other objects in the scene, I am able to specify a model transformation to scale, rotate, and translate it. The scene consists of the following objects spinning in various ways:

- A triangle with primary colours interpolated from its vertices.

- Cubes with primary colours interpolated from their vertices.

- Cubes with textures that have been generated or loaded from a file.

- A subdivisible icosahedron made up of a single colour.

- The loaded spaceship model.